Findings

What works:

There’s more evidence that having women in elected office reduces corruption. This U4 policy brief reviews the data and suggests that it’s partly because elected women tend to be more risk averse when it comes to engaging in corruption, and partly because their political agendas tend to emphasize tackling corruption that negatively impacts women.

Formal incorporation of transparency and accountability principles increased the effectiveness and efficiency of public procurement in Maluku province of Indonesia, according to statistical analysis of survey data (n=79). Meanwhile, findings that FOIA legislation increases government transparency were confirmed by the replication of a field experiment, this time in Dutch and US municipalities, though differences in effects also emphasize the important determining effect of local culture and of political and legislative context.

This dissertation provides evidence that direct democracy improves women’s participation and political activism among ethnic minorities, based on a quasi-experiment and analysis of survey data in Sweden, Switzerland and the US.

What doesn’t work:

This close study of the Kenyan Open Data Initiative suggests that the absence of fundamental conditions like legal frameworks and goodwill contributed to the mechanisms that failed to create healthy supply/demand dynamics and support the institutionalization of open data policy. It’s way deep in theoretical weeds, so you’ll need to slog carefully through to extract the useful bits (the role of engagement initiatives, budget allocations, etc).

Reading Philippines panel data on community driven development programs in light of participatory budgeting suggests that CDD programs fail due to elite capture.

Information intermediaries are part of the problem of political exclusion. This from the application of economic principles to political information flows in the US, which explains why low-income communities are also less exposed to political information, under-served by accountability journalism, and less politically engaged (no data).

Mixed messages:

E-government might reduce corruption, fail to do so, or be counter-productive. The devil is in the details, but Nasr G. Elbahnasawy’s review of the evidence base suggests the importance of bespoke approaches, strong political leadership, collaboration and legislative frameworks. On the other hand, this paywalled chapter suggests that “Empirical findings reveal that ICT does indeed have a significant role in reducing corruption.”

Participatory mechanisms are effective at increasing social trust but not political trust (trust between citizens vs trust in government), according to a survey of participants in the Estonian Citizens Assembly (n=847) (conference paper, not peer reviewed). Open data portals only rarely impact the processes and cultures of city government, and product-based outcomes (apps, websites, innovations) are more prominent in both expectations and in fact, according to interviews with managers of open government projects in 15 US cities. Oh, and the Chinese government is consulting its citizenry, but is “more responsive to street-level implementers than to other social groups”, according to analysis of 2% of 7,899 online comments to healthcare reform.

People with offline experience engaging government are less active on mobile open gov platforms, according to this survey of platform users in the Austrian city of Linz (paywall, no methods data in abstract), but it’s hard to say what that implies for the design of gov platforms.

The Duh Desk:

Processing big data isn’t enough to make cities accountable, according to this case study of Turin. They also need to invest in “integrated systems” to improve data quality. Huh.

Government websites suck. We know this, but a “detailed review of 400 state government websites” in the US confirmed that “99 percent fail when scored on their foundational functionality.” Meanwhile, content analysis of government social media outreach in Canada suggests that Twitter is better than Instagram for sustained interaction with the public.

Hot Topics

Open Data Stocktaking:

Charalabidis, Zuiderwijk and Janssen have a new book on The World of Open Data, with chapters on life cycles, infrastructure, policies, interoperability, biz models, and areas for future research. Meanwhile, the State of Open Data project is about to release over 30 chapters on the last 10 years of the open data movement (I have a chapter on civil society). Plus, there’s lots of useful how-to stuff for open data further down, under Resources and Data.

All the artificial intelligence:

Lots of reports this month. ILDA has a report on government AI in Latin America (four cases in Argentina and Uruguay), the Berkman centre has a report on AI and human rights, and Citizen Lab has a report on AI and automated decision-making in Canada’s immigration and refugee systems (it might violate rights).

There’s practical guidance too. GovEx has an Ethics & Algorithms Toolkit to help governments manage risk. USAID has a guide on using AI in development programming, while an Italian law professor has proposed this human rights-based methodology for self-assessing the ethical impacts of AI data processing (conceptual article, dirty details forthcoming).

Resources and Data

How To’s:

This handbook from @Global_Witness presents “10 methods for using data from oil, gas and mining projects to check whether companies are paying the right amount to governments. Each method features ‘real life’ case examples to illustrate how this can be done.”

@timdavies and Duncan Dewhurst lay out a step-by-step method for linking project and contract data to achieve transparency for infrastructure and construction projects. Plus, @opencontracting has guides on using R for OCDS (en español) and Visualizing OCDS with Kibana.

The GSM Toolkit for Researching Women’s Internet Access and Use “has been designed to help researchers collect comparable, accurate data that is reliable and valid, by using example questions, for both qualitative and quantitative research approaches.”

Sunlight’s newly launched Open Data Policy Hub “is a ‘one-stop shop’ for drafting, crafting, and enacting open data policy,” rankings of corporate respect for digital rights are now available in six languages, and the GovLab has produced a methodology for assessing and segmenting open data demand. Most of this was overshadowed by the fact that Google has a new search engine for open data.

Wading into the assessment weeds, Lithuanian researchers have proposed an evaluation framework to measure how governments consolidate regulatory services in delivering e-government.

Data sources:

The latest Open Data Barometer is out, and shows that the state of open data isn’t great, but that some countries are making real progress. Specifically, and despite lagging performance by early leaders, the 30 governments that have made specific global commitments and been included in this year’s barometer are doing decidedly better in opening data than countries that have not.

Whoa. There’s a Disinformation Resilience Index, which is a quantitative assessment of Eastern and Central European countries’ exposure to Kremlin-led disinformation and the level of national resilience to disinformation campaigns.

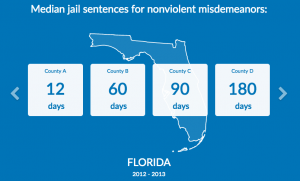

Lastly, there’s a new initiative aiming to “measure every stage of the criminal justice process across the 3,000+ counties in the United States.” The methods and the data are both impressive, and so are some of the discrepancies:

Useful Research

It’s always nice to see NGO research get used. This blogpost describes how Global Witness used MySociety’s database on politicians to build a tool for automatically red-flagging corruption risks.

Concepts and Case studies

- Digital feminism in Fiji (so much potential)

- Govt social media in Bahrain (ppl use it)

- Open government in Sweden (you keep using that word, I do not think it means what you think it means)

- Participatory Budgeting and Urban Development in Mozambique (empowerment is “stratified upon territorial asymmetry”)

- 20 years of e-gov in Kazakhstan (“non-linear and multidimensional”)

- Kenyan Judiciary Open Government readiness assessment (paywall)

- Digital India and Its Impact on Indian Economy (financial literacy still matters)

- Using the internet to grow UK political parties (younger and more diverse)

- Code for Australia fellowships with local councils (co-creation makes it better)

- Crowdsourcing in Polish municipalities (gov thinks it’s useful)

- E-parliament in Jordan (there’s a ways to go)

- E-government in Jordan (digital literacy matters)

- Using open data to rank the human development of Indonesian cities (it’s complicated)

- Usage of open gov data in Bangladesh (expectations and social influence matter)

- A dissertation on the internet and women’s activism in Iran (more opportunities)

- NGO accountability and resilience (there are lots of strategies)

- Authoritarianism and digital surveillance in the west (mutually supporting)

- Chat bots for open data (for better decision-making, plus AI)

- 3-D and virtual maps for participatory processes (functionality reshapes everything!)

- Accountability journalism bots (yes, you read that right, in the CPJ no less)

Community & Curation

State of play

GovLab describes data collaboratives, their functions and objectives, and Hollie Russon Gilman predicts what civic tech will look like come 2040. Data colonialism is the first in a series of articles under a special issue on Big Data from the South, and is free for a month, and the Center for Media, Data and Society has curated lectures and essays on Public Service Media and fake news. Erhardt Graeff condenses his call for Empowerment-based Design in Civic Technology to 22 pages – important stuff.

You can access all 11 papers presented at this year’s Open Data Research Symposium, with a focus on (localizing country commitments, trends in LatAm), sectors (transit, procurement, public services, health), countries (gender in Kosovo, Indonesia, Tanzania), and ecosystems (growth factors, journalist intermediaries).

Reflecting on this review of ICT4D research, Wayan Vota suggests that the field could best improve by creating a shared conceptual framework, engaging with practitioners, and de-fetishizing ICTs (my words).

Duncan Green is collecting evidence of the SDGs’ impacts. He’s also summarized recent research on closing civic space (which suggests that it’s not closing so much as changing, with changing rules for who’s in and what’s allowed), and asks for input on what other research should be done. Meanwhile, @citizenlabco suggests 10 Must-Read Books about Digital Democracy.

In the Methodological Weeds

Duncan Green reflects on using repeated interviews to build trust and measure change (local researchers, same subjects). Lots of interesting asides on ethics, data management, government collaboration.

@dparkermontana reflects on how participant observation methods for assessing political communication need to be adapted to a social media context, and the ethics in particular.

The NYT has a case-based explainer on why randomization matters in program evaluation, and a study in which 29 teams queried the same data set to answer the same research questions suggests that yes, statistical analysis looks fancy but is kind of random.

Spanish researchers go deep into the weeds on proposal recommendation methods in electronic participatory budgeting platforms (which proposals are presented to users so that they can vote them up or down). They distinguish between different recommendation methods (popularity, geographic proximity, textual similarity), and how these align with the municipal contexts in which EPB platforms operate. They base their analysis on three years of data from three US cities, and conduct “experiments,” but I’m not really sure what they did or what their conclusions are. Interesting reading nonetheless…

A bunch of American researchers have produced R packages for publishing and evaluating research designs (blogpost, git). They’re focused exclusively on experimental research, but the concept is just as applicable (and would be just as useful) for qualitative work. As they note: “Generating a complete design likely requires that a researcher gives information about four things: their background model (a speculation about how the world works), their inquiry (a description of what they want to learn about the world, given their model), their data strategy (a description of how they will intervene in the world to gather data), and their answer strategy (a description of the analysis procedure they will apply to the data in order to draw inferences about their inquiry).”
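For anyone who thinks better in code, here is a rough sketch of what those four pieces might look like for a toy experiment. It is written in plain Python rather than with the R packages themselves, and every function name, the effect size of 0.5, and the sample sizes are my own assumptions for illustration, not anything taken from the authors’ code.

```python
import random
import statistics


def model(n, rng, true_effect=0.5):
    """Background model (assumed): each unit has an untreated outcome y0
    and a treated outcome y1 that is higher by a constant effect."""
    units = []
    for _ in range(n):
        y0 = rng.gauss(0, 1)
        units.append({"y0": y0, "y1": y0 + true_effect})
    return units


def inquiry(units):
    """Inquiry: the average treatment effect across all units."""
    return statistics.mean(u["y1"] - u["y0"] for u in units)


def data_strategy(units, rng):
    """Data strategy: randomly treat half the units and observe
    only the outcome that matches each unit's assignment."""
    treated = set(rng.sample(range(len(units)), len(units) // 2))
    return [
        (i in treated, u["y1"] if i in treated else u["y0"])
        for i, u in enumerate(units)
    ]


def answer_strategy(data):
    """Answer strategy: difference in mean outcomes, treated minus control."""
    treated = [y for is_treated, y in data if is_treated]
    control = [y for is_treated, y in data if not is_treated]
    return statistics.mean(treated) - statistics.mean(control)


def diagnose(sims=500, n=100, seed=1):
    """Simulate the whole design many times and summarize how far
    the estimate lands from the thing we actually wanted to know."""
    rng = random.Random(seed)
    errors = []
    for _ in range(sims):
        units = model(n, rng)
        estimand = inquiry(units)
        estimate = answer_strategy(data_strategy(units, rng))
        errors.append(estimate - estimand)
    return {
        "bias": statistics.mean(errors),
        "rmse": statistics.mean(e * e for e in errors) ** 0.5,
    }


if __name__ == "__main__":
    print(diagnose())
```

The point of writing a design down this way is that you can run it before collecting any data, and see whether the answer strategy would actually recover the inquiry under the model you assumed.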

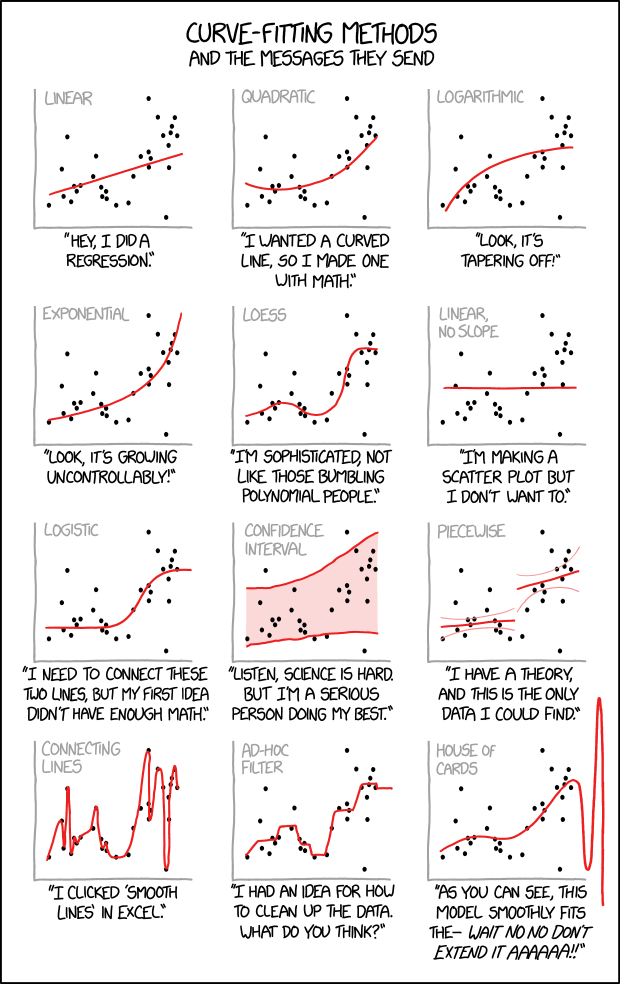

This from XKCD:

Miscellanea and Absurdum

- ‘Disco Sour’: a dystopian handbook for Internet democracy (Citizen Lab)

- The naked body scanner, reviewed (Wall Street Journal)

- A short guide to the history of ‘fake news’ and disinformation (ICJ)

- Tanzania is about to outlaw fact checking: here’s why that’s a problem (From Poverty to Power)

- When traditional communications outperform digital technology (Open Democracy)

- According to this editorial, sociologists and political scientists have less policy impact than economists working on the same issues, because they are less open and transparent throughout their research process.

- The e-scooter company Bird has launched a “gov-tech platform” for scooters in an effort to mollify city regulators. It allows cities to do things like set maximum speeds and no-ride zones.

- New research shows that, post net neutrality, internet providers are slowing down your streaming (News @ Northeastern)