This is the first in a series of posts ahead of the OGP Summit in Ottawa, summarizing aspects of my doctoral research on OGP and civic participation. You can find some background on that research here.

We spend a lot of time talking about whether or not global multi-stakeholder initiatives (MSIs) like the OGP are “working”. These conversations often take place against a backdrop of dissatisfaction with results, flagging enthusiasm, and questions about whether MSIs are worth funding. And these conversations are largely dissatisfying for everyone involved, because the evidence and the tools we have for measuring what works simply aren’t good enough. This is the starting point for my doctoral work, which lays out three alternative strategies for assessing the impact and effectiveness of public governance MSIs, each presented briefly below.

The measurement challenge

I see two main problems with the tools we have for measuring whether or not public governance MSIs “work.”

Firstly, they’re all pretty young, and haven’t necessarily had time to produce long-term impacts. As Brockmyer & Fox (2015) suggest, “it is simply too soon to expect meaningful evaluations of [MSI] effectiveness or impact” (66).

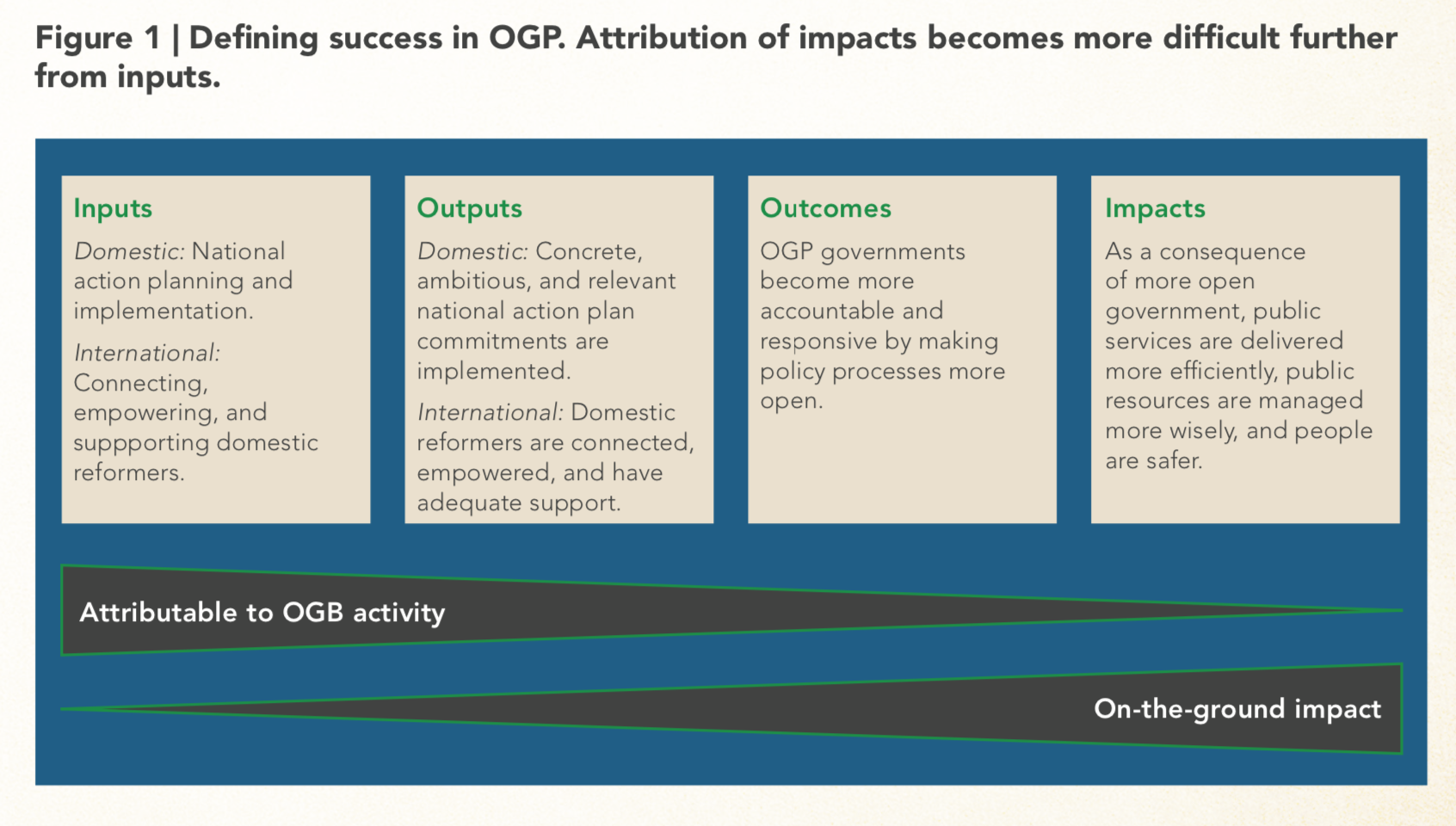

Secondly, even if we waited long enough, we tend to assess MSIs within pretty specific results chains, and the further along that chain we get, the harder it is to attribute outcomes and impacts to the MSI, because all sorts of other stuff happens along the way.

As Foti sums it up:

Alternatives

In response to this, and acknowledging the absence of long-term data in which to seek evidence of impacts, I propose three alternative strategies for assessing public governance MSIs like the OGP.

1. Identify and test for causal mechanisms.

Most MSIs are pretty fuzzy on how they expect to influence country behavior and policy. It’s hard to be specific when countries and political contexts are so different, and when everything you’re doing relies on enthusiasm for something new. So at their best, theories of change take the shape of abstract flow charts and a handful of vague assumptions. The truth is that political change is superlatively messy, affected by the interaction of an infinite number of contextual factors.

Spelling out the precise causal mechanisms through which MSIs hope to provoke change in these contexts is hard work, and requires rigorous integration of theory and contextual analysis. But it’s possible, and provides a basic tool for testing whether causal mechanisms are active in a country context, long before the impacts to which they hope to contribute are manifest. You can think of this as a kind of progress report. If we want to move from A to B, instead of simply waiting for the journey to be completed, we can first identify the path (however circuitous it may be), and then check at any given point to see if we’re on it.

I validate this assessment strategy by looking at how OGP exerted causal influence on civic participation policy in Norway through knowledge transfer and policy learning mechanisms. Norway is a good case because it does surprisingly poorly in OGP and there’s a pile of data on which to assess the mechanism (in methodological terms it’s data rich and deviant).

The good news is that it’s possible to develop and test for theoretically grounded causal mechanisms. Interestingly, I also find that you can measure how they interact (and sometimes conflict) with other mechanisms. The bad news is that this doesn’t get you any closer to definitive attribution. Still, it’s a road check, showing that it’s possible to determine whether specific causal mechanisms are active, and that’s better than waiting for impact or reading the tea leaves of intermediate policy outputs.

2. Measure outside of the results chain.

OGP is built on a theory of change that hopes repeat interactions with civil society will socialize civic participation within government.

As norms shift and governments become more comfortable with transparency, governments will begin introducing more opportunities for dialogue and become more receptive to civil society input and participation. (OGP, 2014: 16).

If this kind of socialization is successful, then we’d expect to see government norms and culture changing in specific institutions.

There are lots of ways we might seek evidence of this, including interviews with stakeholders, changes in policy, and changes in operations or institutional representation. The vast array of global comparative data on public governance provides a particularly rich source of evidence. My dissertation looked specifically at OGP and civic participation, so I looked at the relationship between OGP membership and countries’ e-participation. This leveraged data from the UN’s E-participation Index for 194 countries, from two years before the launch of OGP, through 2018.

I’ll dig into the details in a later post, but the short version is that I found modest but statistically significant effects of OGP membership on countries’ e-participation, and data tables suggest that this effect is in fact causal. There’s a lot to say about that, but at base, it’s a fundamental demonstration of how data on policy outside the MSI results chain can be used to evaluate the more subtle influence of MSIs on institutional culture and the socialization of governance norms.
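To make the logic of this kind of comparison concrete, here is a minimal sketch of a difference-in-differences calculation. It is an assumption for illustration only: this post does not specify the estimator used in the dissertation, and the country labels, scores, and function names below are all hypothetical, not real E-participation Index values.

```python
from statistics import mean

# Hypothetical toy panel: (country, is_ogp_member, period, e_participation_score).
# Scores are invented for illustration and are NOT real index values.
PANEL = [
    ("A", True, "pre", 0.40), ("A", True, "post", 0.62),
    ("B", True, "pre", 0.30), ("B", True, "post", 0.50),
    ("C", False, "pre", 0.35), ("C", False, "post", 0.47),
    ("D", False, "pre", 0.25), ("D", False, "post", 0.39),
]


def group_mean(rows, member, period):
    """Average score for one membership group in one period."""
    return mean(
        score for _, is_member, p, score in rows
        if is_member == member and p == period
    )


def diff_in_diff(rows):
    """Change among members minus change among non-members."""
    member_change = group_mean(rows, True, "post") - group_mean(rows, True, "pre")
    other_change = group_mean(rows, False, "post") - group_mean(rows, False, "pre")
    return member_change - other_change


estimate = diff_in_diff(PANEL)
print(f"Difference-in-differences estimate: {estimate:.2f}")
```

The point of subtracting the non-member change is to strip out whatever trend affected all countries over the period, so that only the extra change among members remains. A real analysis would also need significance tests and controls, which this sketch leaves out.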

3. Rigorously assess the quality of norms promoted.

The most obvious way that MSIs aim to influence government policy and practice is by telling governments what to do, whether in the form of specific standards, best practices, or abstract norms. Much of the conversation about whether MSIs are “working” circles around the question of whether governments are listening when they are told what to do. We would do well, however, to take a close look at the norms and policies that get promoted and adopted in MSI contexts. Even if they are adopted wholesale and in earnest by governments, can they really be expected to make a meaningful contribution to the end-goals of improving public governance?

One obvious way to answer this question is to evaluate the quality of specific norms and policies. Specifically, how likely is it that those norms and policies will contribute to more responsive and accountable government if they are adopted and implemented by governments, wholesale and in good faith? This is a particularly sticky sticking point for OGP, which has been criticized for giving governments free rein in defining what open government means for them.

To rigorously assess the quality of norms in an OGP context means going deeper than looking at co-creation guidelines, of course. To test and validate this approach I went through the participation and accountability literature to devise a framework to identify the design characteristics for participatory policies and activities that are most closely associated with contributions to responsive and accountable government. I then looked at all formal instances where the OGP steering committee or support unit promoted a specific participatory norm or policy (that was a loooooong list), all guidelines and guidance notes for developing action plans, and the civic participation commitments in national action plans over a four-year period. I took all these norms and policies, and tested them against the quality metrics developed above.
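As a rough illustration of what testing norms against quality metrics can look like in practice, here is a minimal sketch of rubric scoring. Everything in it is assumed for the example: the criteria names, the two policies, and the equal weighting are hypothetical stand-ins, not the framework from the dissertation.

```python
# Hypothetical design criteria. The actual framework is not reproduced here.
CRITERIA = ("binding_follow_up", "inclusive_access", "feedback_to_participants")


def quality_score(features):
    """Share of criteria that a norm or policy satisfies, from 0.0 to 1.0."""
    met = sum(1 for criterion in CRITERIA if features.get(criterion, False))
    return met / len(CRITERIA)


# Hypothetical policies, each coded by the criteria it meets.
policies = {
    "online consultation portal": {"inclusive_access": True},
    "citizen audit with mandated response": {
        "binding_follow_up": True,
        "inclusive_access": True,
        "feedback_to_participants": True,
    },
}

scores = {name: quality_score(features) for name, features in policies.items()}

for name, score in sorted(scores.items(), key=lambda item: item[1]):
    print(f"{score:.2f}  {name}")
```

Coding each norm as a set of yes/no design features keeps the scoring transparent, though a real framework might weight criteria differently or allow partial credit.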

So, according to those quality metrics, how likely is it that the participation norms and policies in an OGP context will contribute to more open and accountable government? Not very. There’s a lot more to say about that in a future post. For now, it’s worth noting that the quality of governance norms and policies can be rigorously assessed, and that this can tell us something about how public governance MSIs are performing.

So those are three alternative strategies for assessing the impact and effectiveness of public governance MSIs, absent clear evidence of long-term impact. All three are validated in my doctoral work and the next couple of posts on OGP ahead of the Ottawa Summit will take a close look at some of the most interesting substantive findings in the dissertation.