This is the second in a series of posts ahead of the OGP Summit in Ottawa, summarizing aspects of my doctoral research on OGP and civic participation. See also the post on assessment strategies and the background on the research.

Soft power and voluntary social dynamics are what make multi-stakeholder initiatives (MSIs) like OGP unique. Yet these dynamics are almost completely ignored in the burgeoning body of research on public governance MSIs. This is probably because compliance and impact seem easier to measure, but research on how OGP facilitates socialization and learning is as feasible as it is important. This post presents findings from a case study to demonstrate how. It also suggests three steps to facilitate policy learning in OGP countries, and describes a research agenda for developing predictive theory on how and when policy learning might occur.

The gap

Public governance MSIs like the OGP, EITI and CoST are different from other kinds of international partnerships and cross-sectoral initiatives because of the way they leverage the voluntary participation of governments, and rely on soft social power to do so (Bezanson & Isenman, 2012: 1-2). And if public governance MSIs are inherently soft power initiatives, OGP is likely the softest of the bunch, lacking the common standards for evaluation that ground other MSIs (Brockmyer & Fox, 2015: 34), and explicitly hoping that jumping through MSI hoops will socialize participatory norms in government institutions:

As norms shift and governments become more comfortable with transparency, governments will begin introducing more opportunities for dialogue and become more receptive to civil society input and participation (Theory of Change in an early strategy doc: OGP, 2014: 16).

A non-trivial body of research is emerging around OGP, but to date most of the case-based research is still best characterized as compliance studies or anecdotal documentation, and the large-n comparative work generally focuses on the process indicators that can be read out of action plans. This type of work is familiar from research on other types of governance advocacy, like transnational human rights advocacy and the political pressure and persuasive roles of civil society. What it doesn’t capture is what is unique about OGP: governments join because they want to, and upon joining have full discretion to do almost whatever they want and call it open government.

I get it. It’s hard to rigorously demonstrate and measure the role and effect of ideas and other mushy stuff in political processes (Jacobs, 2014). But that type of research is doable, and any discussion of OGP’s effectiveness and impact falls short without evidence on how and when soft power dynamics are at play. We need more of it.

I took a first stab at measuring soft power in two articles for my dissertation.

- First, a comparative analysis measured the effects of OGP membership on an adjacent policy area (e-participation) and argued that the significant causal effects found there could be explained by informal changes to institutional culture. More about that in another post, soon.

- Second, I ran within-case analysis on a single case study to see how that might happen. I looked at Norway because it is the most voluntary of voluntary cases, and because, despite a close alignment between OGP norms and Norwegian culture, Norway’s performance is consistently poor. This makes OGP effects likely to be easier to measure, by cutting down on false positives: changes to institutional culture that would have happened anyway. Lastly, Norway is a super data-rich case, because it was a founding member, with institutional evidence stretching back to two years before launch, and because I worked there as the IRM researcher for two reporting cycles.

The rest of this post focuses on what I learned from that second effort (preprint).

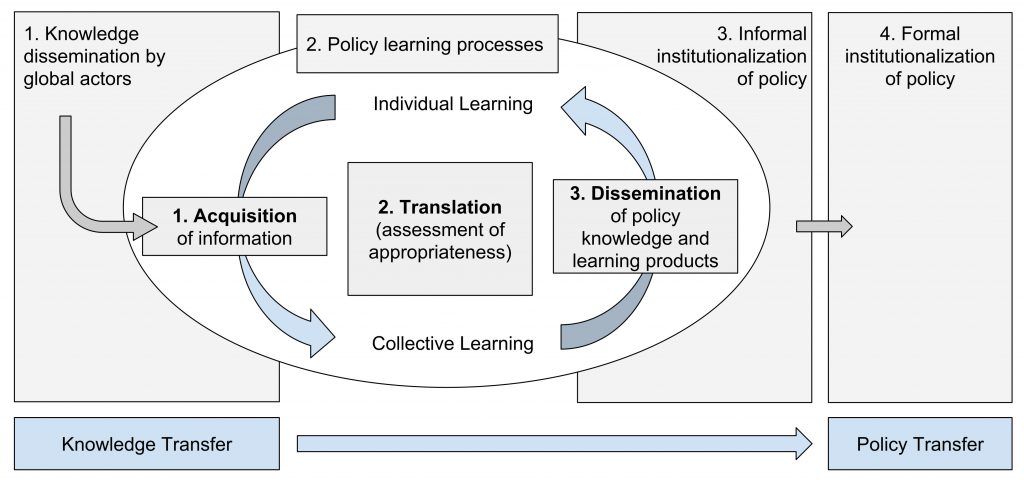

How to measure soft power and socialization in single case research

To measure socialization effects, I started with policy learning dynamics, taking a cue from OGP’s own emphasis on international knowledge exchange and networking. To do so, I relied on the conceptual framework of Heikkila and Gerlak (2013), who demonstrate how policy learning processes “often start with individuals and move up into different levels of subunits of a group” (486), contributing to collective policy learning in institutions, and eventually can also contribute to formal policy outcomes like laws and regulations. Importantly, this model relies on the actions of individuals inside institutions, who receive info, make sense of it, and then share it, facilitating iterative processes of individual and group learning within and across institutions. This aligns nicely with a policy transfer and translation perspective, which argues that international advocacy is good at introducing ideas, which then get debated and adapted to national contexts (or don’t, depending on what actual people actually do).

Adapting policy studies literature to the OGP context led to the creation of this analytical framework for policy knowledge transfer and policy learning.

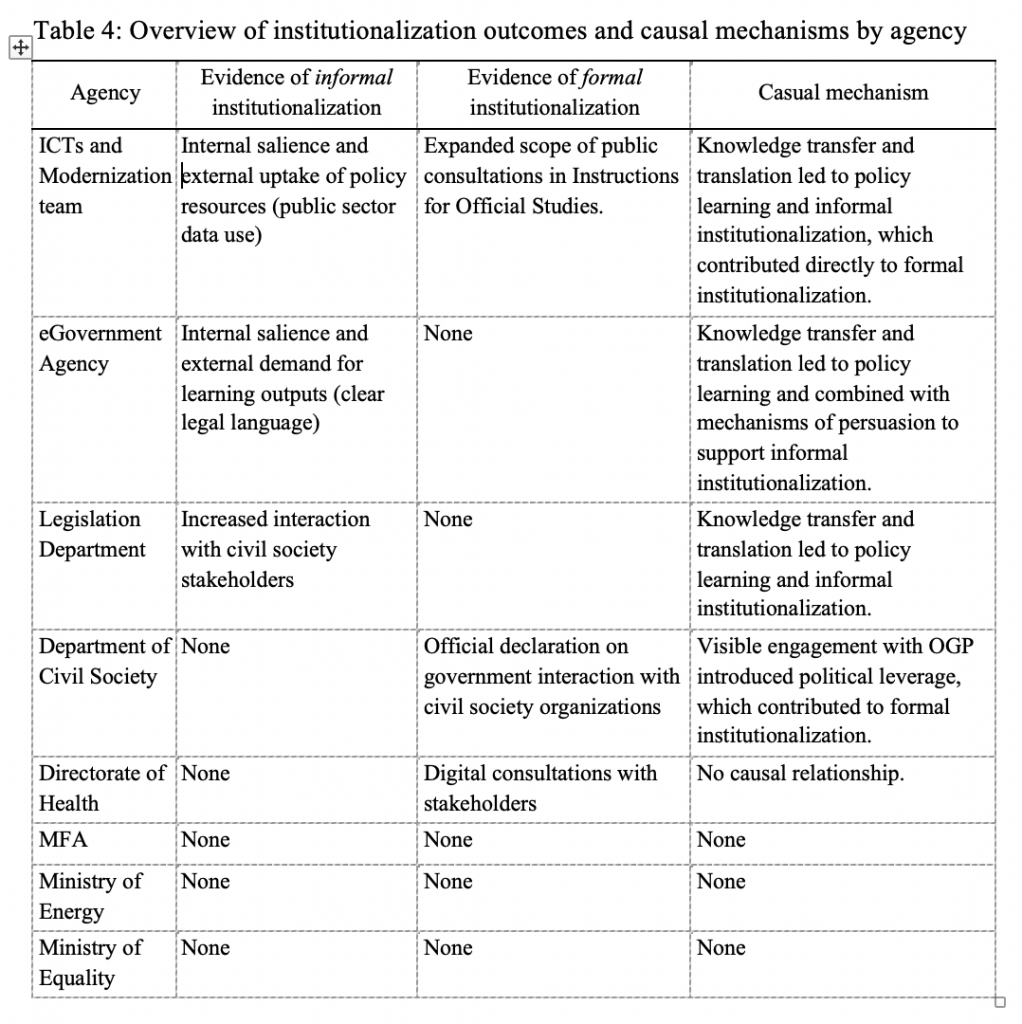

To test whether this framework could explain policy changes associated with OGP in Norway, I dug through OGP-related documents from two action plan cycles, and identified eight government institutions where there was evidence of institutional change that might be attributed to OGP. I then conducted a pile of interviews, and reviewed a bunch more documentation, to measure whether OGP exerted a causal influence, and whether that could be explained with the above framework.

A note on process tracing:

I’ve been asked more than once, “Isn’t process tracing just a fancy way of describing what happened?” Well, yes, but that doesn’t mean it’s sloppy. This was a fairly rigorous approach to process tracing, adhering to the three-part methodological standard set out by Bennett and Checkel (2015), in which process tracing methods are meta-theoretically grounded, contextually attuned to discursive structures, and methodologically attentive to the challenge of multiple causal explanations (20–25). This last standard implies the use of Bayesian-inspired tests for assessing the veracity of multiple causal explanations as they arise, and is strengthened by the diversity of evidentiary sources described above (Bennett and Checkel 2015, 292–93; Yin 2009, 68, 120–21).
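To make “Bayesian-inspired tests” a little more concrete, here is a minimal Python sketch of the underlying logic: Bayes’ rule applied to one piece of evidence for one causal explanation, using two test types common in the process-tracing literature (hoop and smoking-gun tests). This is a generic illustration, not the procedure or the numbers used in the Norway study; the function name `update` and all probabilities are hypothetical.

```python
def update(prior: float, p_e_given_h: float, p_e_given_not_h: float,
           observed: bool = True) -> float:
    """Return P(H | evidence) via Bayes' rule.

    prior           -- P(H): confidence in a causal explanation before the test
    p_e_given_h     -- P(E | H): how likely the evidence is if H is true
    p_e_given_not_h -- P(E | not H): how likely it is if a rival explanation holds
    observed        -- whether the evidence E was actually found
    """
    for p in (prior, p_e_given_h, p_e_given_not_h):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    if not observed:
        # Evidence not found: use P(not E | H) and P(not E | not H) instead.
        p_e_given_h, p_e_given_not_h = 1.0 - p_e_given_h, 1.0 - p_e_given_not_h
    numerator = p_e_given_h * prior
    denominator = numerator + p_e_given_not_h * (1.0 - prior)
    if denominator == 0.0:
        raise ValueError("evidence has zero probability under both hypotheses")
    return numerator / denominator


# Hypothetical likelihoods, chosen only to illustrate the two test types.
HOOP = (0.95, 0.60)         # E nearly certain if H holds, but also common otherwise
SMOKING_GUN = (0.30, 0.02)  # E unlikely even if H holds, and very rare otherwise

prior = 0.5
for name, (p_h, p_not_h) in [("hoop", HOOP), ("smoking gun", SMOKING_GUN)]:
    found = update(prior, p_h, p_not_h, observed=True)
    missing = update(prior, p_h, p_not_h, observed=False)
    print(f"{name}: found -> {found:.2f}, not found -> {missing:.2f}")
```

With these made-up numbers, passing the hoop test moves a 0.5 prior only to about 0.61, while failing it drops it to about 0.11. The smoking gun works the other way around: finding it raises confidence to about 0.94, while missing it only lowers it to about 0.42.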

Sorry, I’ll refrain from academic writing for the rest of this post.

The most challenging part of this research was making sense of the tremendous amount of interesting and often conflicting data that was generated. I tried several approaches, including coding software for interview data, and hand coding with spreadsheets according to themes. These approaches made it really hard to apply tests for bias, however. In the end, the iterative approach of “soaking and poking”, essentially conducting the same analysis over and over and trying to poke holes in winning arguments with contrary evidence, proved best. It was kind of painful, but got me deep beneath the skin of the case.

What I learned from Norway

OGP’s influence in Norway was pretty modest, but influence there was. More importantly, I demonstrated that it can be measured, and that it’s possible to differentiate between different causal mechanisms, including soft power mechanisms like policy learning. Most intriguingly, I also demonstrated that it’s possible to document and measure the interaction of multiple causal mechanisms contributing to policy outputs in an OGP context.

Lastly and most importantly, I learned that this is all super messy. The way individual policy makers and civil servants experienced OGP and OGP norms varied wildly. They were influenced by social factors at the national, institutional, and personal level, and made decisions about whether to facilitate policy learning according to multiple logics (moral, consequence, and specification [who else is doing similar stuff]). You could think of this as a three-by-three matrix, capturing the combination of different logics and different levels of influence. Even that would understate the complexity, though, because these combinations were often radically different for different people working in the same institutions, and a lot of people described more than one combination of influences.
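The three-by-three matrix can be sketched as a simple tally. The Python sketch below assumes each interview has been coded into (person, level, logic) records; the function name `tabulate` and the example records are hypothetical, invented only to show the structure, and are not data from the Norway study. Alongside the cell counts, it keeps track of which people fall into more than one cell.

```python
from collections import Counter, defaultdict

LEVELS = ("national", "institutional", "personal")
LOGICS = ("moral", "consequence", "specification")


def tabulate(codings):
    """Count coded influences in a level-by-logic matrix.

    codings -- iterable of (person, level, logic) tuples, one per coded influence
    Returns (matrix, cells_per_person): matrix[level][logic] is a count, and
    cells_per_person maps each person to the set of cells they occupy.
    """
    counts = Counter()
    cells_per_person = defaultdict(set)
    for person, level, logic in codings:
        if level not in LEVELS or logic not in LOGICS:
            raise ValueError(f"unknown category: {level!r}, {logic!r}")
        counts[(level, logic)] += 1
        cells_per_person[person].add((level, logic))
    matrix = {lvl: {lgc: counts[(lvl, lgc)] for lgc in LOGICS} for lvl in LEVELS}
    return matrix, dict(cells_per_person)


# Hypothetical codings, invented only to show the structure (not study data).
example = [
    ("official_A", "institutional", "consequence"),
    ("official_A", "personal", "moral"),
    ("official_B", "national", "specification"),
    ("official_C", "institutional", "consequence"),
]
matrix, cells = tabulate(example)
for level in LEVELS:
    print(level, matrix[level])
multi = [person for person, occupied in cells.items() if len(occupied) > 1]
print("people with more than one combination:", multi)
```

Keeping the per-person cell sets next to the counts matters here: the aggregate matrix alone would hide the fact that the same person can sit in several cells at once.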

From the perspective of someone who wants to see OGP successfully lead to policy learning and socialization in member countries, this suggests three steps might be important:

- identify which people are the most important gate-keepers and go-between policy intermediaries

- identify which factors are most influential for how they consider OGP norms

- frame OGP norms accordingly

The Norway case shows what happens when those steps aren’t taken: how little learning can actually happen, even in a country where you’d expect plenty of it.

How is this relevant in other country contexts?

In simple terms, it’s not, but it might be. In order not to get wonky, this will be brief. These findings can’t be immediately generalized in the same way we generalize statistical findings from a representative sample.

This analysis did provide a basis for analytical generalization, however. Specifically, it

- produced two theoretical premises about MSI policy learning,

- provided a roadmap for testing those premises in other cases, using contrasting typologies whereby countries differ on key variables, including alignment of national political culture with OGP norms and the level of civil society engagement with OGP processes, and

- suggested how to use those analyses to develop a middle-range theory about MSI policy learning, capable of predicting when and how policy learning will occur.

It’s more complicated than that, to be sure. Check out the paper for details.

The main point here is that socialization can be researched and measured with rigor. Doing so can provide important insights about significant country cases, and can also usefully inform OGP advocacy strategies and country engagement.

With a little bit of work, it can also be used to develop predictive theories. That’s a long term research agenda, but it’s an important one if we want to really understand OGP’s impact. We’d best get started.