…social accountability processes almost always lead to better services, with services becoming more accessible and staff attendance improving. They work best in contexts where the state-citizen relationship is strong, but they can also work in contexts where this is not the case. In the latter, we found that social accountability initiatives are most effective when citizens are supported to understand the services they are entitled to.

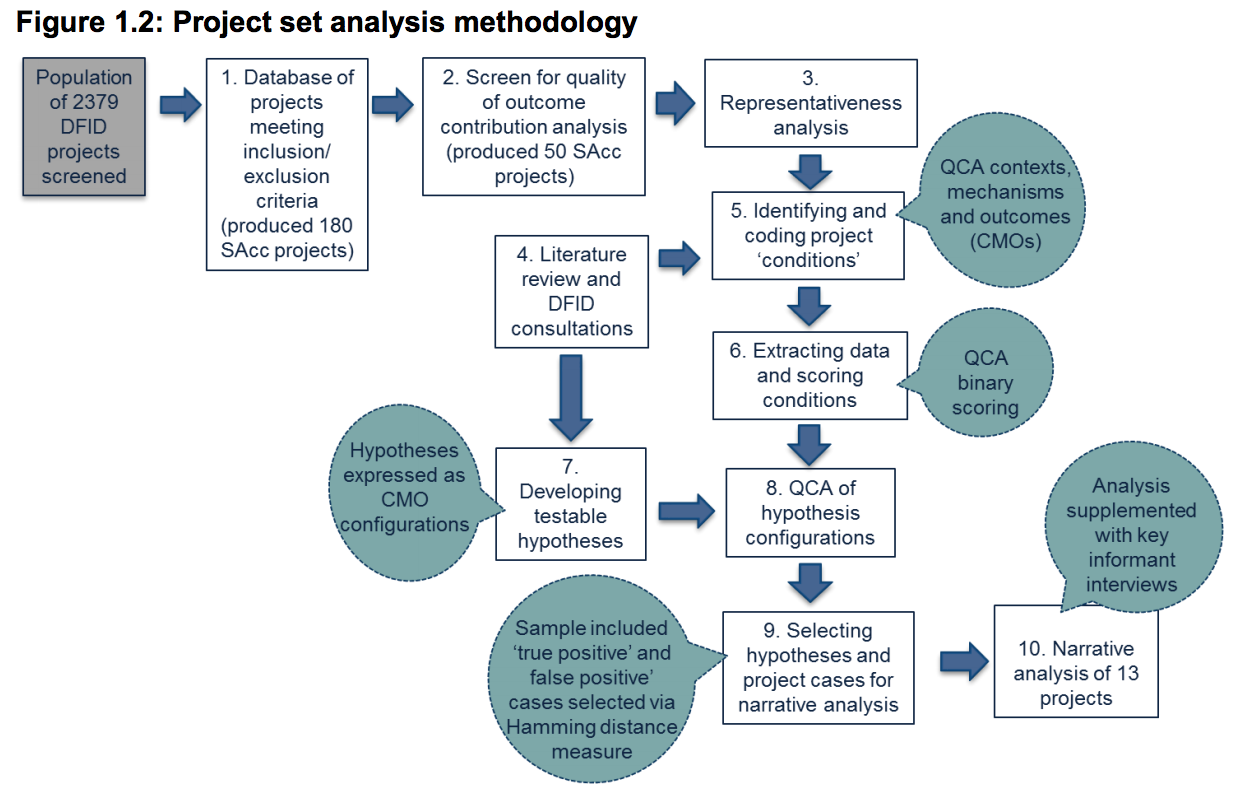

That’s from a blogpost describing a macro evaluation of 50 DFID projects (selected from a pool of 2,379 for their data quality, full report here). The findings are super interesting (they’ve been discussed for a while, but the final report was published this summer, and the team held a webinar last week). The “almost always” language in some of the findings is a bit over-enthusiastic, given the nature of their project pool and all the hidden factors that play into becoming a DFID project. They don’t really suss out what this means for external validity (generalization gets a 70-word bullet on p. 19). But this is still likely the most evidence-based analysis available, and it’s worth further testing in other contexts. Their take on the accountability trap (factors that constrain scaling local wins) is also worth close consideration.

The evaluators also win for their methods. They used Qualitative Comparative Analysis (QCA) to test theory-based hypotheses about what leads to change, complemented by deep within-case analysis. Their application of QCA is similar to Bennett’s typological theorizing, but quant-enhanced and quality-controlled with third-party comparative data on contextual conditions, without going fully down the stats rabbit hole like fuzzy logic. They were also able to smartly combine the comparative and the within-case work. Here’s a visualization from their methods section:

This is an excellent approach for building rigor out of the loose anecdotes and assumptions that ground cutting-edge field practice. Hoping we’ll see similar approaches coming out of the MAVC research synthesis.
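
For anyone new to QCA, the comparative step can be roughly sketched in a few lines of Python. This is a minimal crisp-set illustration, not the evaluators' actual procedure: the condition names, the six cases, and the consistency threshold are all made up for the example. It groups cases by their configuration of conditions, then checks how consistently each configuration goes together with the outcome.

```python
from collections import defaultdict

# Hypothetical cases for illustration only; NOT data from the DFID macro evaluation.
# Each condition and the outcome is coded 1 (present) or 0 (absent), crisp-set style.
CASES = [
    {"strong_state_citizen": 1, "citizens_informed": 1, "improved_service": 1},
    {"strong_state_citizen": 1, "citizens_informed": 0, "improved_service": 1},
    {"strong_state_citizen": 0, "citizens_informed": 1, "improved_service": 1},
    {"strong_state_citizen": 0, "citizens_informed": 1, "improved_service": 1},
    {"strong_state_citizen": 0, "citizens_informed": 0, "improved_service": 0},
    {"strong_state_citizen": 0, "citizens_informed": 0, "improved_service": 1},
]
CONDITIONS = ("strong_state_citizen", "citizens_informed")  # assumed names
OUTCOME = "improved_service"  # assumed name


def truth_table(cases, conditions, outcome):
    """Group cases by configuration and compute each row's consistency.

    Consistency here is the share of cases with a given configuration
    that also show the outcome.
    """
    rows = defaultdict(list)
    for case in cases:
        config = tuple(case[c] for c in conditions)
        rows[config].append(case[outcome])
    return {
        config: {"n": len(outcomes), "consistency": sum(outcomes) / len(outcomes)}
        for config, outcomes in rows.items()
    }


def sufficient_configs(table, threshold=1.0):
    """Return configurations whose consistency meets the (assumed) threshold."""
    return sorted(c for c, row in table.items() if row["consistency"] >= threshold)


table = truth_table(CASES, CONDITIONS, OUTCOME)
candidates = sufficient_configs(table)
```

In this toy data, the configuration with neither condition present is split (one case improved, one didn't), which is exactly the kind of row where within-case analysis would be needed to work out what is going on.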

There’s also an annex packed with program narratives, which makes for great reading and further thought.